
After completing this reading, you should be able to:

Firms today rely heavily on information to run their operations and pursue their business objectives. This dependency introduces risks that can prevent the organization from achieving its goals. For this reason, every organization needs a process that measures, reports, reacts to, and controls the risk of poor data quality.

Missing data occurs when no value is recorded for a variable in an observation of interest. Missing data can significantly affect the conclusions eventually drawn from the data. The following are cases of missing data in the context of a bank:

Data entry (capture) errors occur when information is input incorrectly. They can occur when transcribing text and also when recording numerical data. For example, the person entering data may record a portfolio standard deviation of 0.02 (2%) in a VaR model when the actual value is 0.2 (20%).
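To illustrate the cost of such a slip, the minimal sketch below assumes a one-period parametric VaR with normally distributed, zero-mean returns and a hypothetical portfolio worth 1,000,000. The helper names `parametric_var` and `flag_volatility_entry`, the 99% confidence level, and the 2x plausibility tolerance are illustrative assumptions, not part of the reading.

```python
from statistics import NormalDist


def parametric_var(portfolio_value: float, sigma: float, confidence: float = 0.99) -> float:
    """One-period normal VaR, assuming a zero mean return (illustrative model)."""
    z = NormalDist().inv_cdf(confidence)  # roughly 2.326 at 99%
    return z * sigma * portfolio_value


def flag_volatility_entry(entered: float, estimated: float, max_ratio: float = 2.0) -> bool:
    """Hypothetical plausibility check: flag an entered volatility that differs from
    an independent estimate (e.g., one computed from return history) by more than
    max_ratio in either direction."""
    ratio = entered / estimated
    return ratio > max_ratio or ratio < 1 / max_ratio


value = 1_000_000                                # hypothetical portfolio value
var_correct = parametric_var(value, sigma=0.20)  # actual volatility
var_typo = parametric_var(value, sigma=0.02)     # mis-entered volatility

print(f"VaR with sigma = 0.20: {var_correct:,.0f}")
print(f"VaR with sigma = 0.02: {var_typo:,.0f}")
print(f"Understated by a factor of {var_correct / var_typo:.0f}")
print(flag_volatility_entry(0.02, estimated=0.19))  # True: ratio is about 0.1
```

Because VaR in this simple model is linear in sigma, the misplaced decimal understates risk tenfold. A cross-check against an independently estimated volatility (a hypothetical 0.19 here) catches the error before it reaches a risk report.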

Duplicate records are multiple copies of the same record. The most common form of duplicate data is an exact copy of another record. For example, the details of a particular bondholder may appear twice on the list of all bondholders. Such duplicates are easy to spot and usually arise when data is moved between systems.

Partial duplicates pose the biggest challenge. In a bank, a list of bondholders may contain records that share the same name, phone number, email, or residential address but differ in other, possibly correct, fields such as the principal amount subscribed. Duplicate data can produce skewed or inaccurate insights.
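The difference between exact and partial duplicates can be sketched in a few lines. This is a minimal illustration, assuming hypothetical bondholder records and assuming that name plus email identifies a holder; in practice the identifying fields would be chosen by the bank.

```python
from collections import defaultdict

# Hypothetical bondholder list; field names are illustrative.
bondholders = [
    {"name": "Jane Doe", "email": "jane@example.com", "principal": 50_000},
    {"name": "Jane Doe", "email": "jane@example.com", "principal": 50_000},  # exact copy
    {"name": "Jane Doe", "email": "jane@example.com", "principal": 75_000},  # partial duplicate
    {"name": "John Roe", "email": "john@example.com", "principal": 20_000},
]


def exact_duplicates(records):
    """Return records whose every field matches an earlier record."""
    seen, dups = set(), []
    for rec in records:
        key = tuple(sorted(rec.items()))
        if key in seen:
            dups.append(rec)
        else:
            seen.add(key)
    return dups


def partial_duplicates(records, id_fields=("name", "email")):
    """Group records that share the identifying fields but differ elsewhere."""
    groups = defaultdict(set)
    for rec in records:
        ident = tuple(rec[f] for f in id_fields)
        groups[ident].add(tuple(sorted(rec.items())))
    return {ident: variants for ident, variants in groups.items() if len(variants) > 1}


print(len(exact_duplicates(bondholders)))     # 1
print(list(partial_duplicates(bondholders)))  # [('Jane Doe', 'jane@example.com')]
```

Exact copies can simply be dropped; partial duplicates are only flagged, since an analyst must decide which principal amount is correct.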

Data inconsistency occurs when different, conflicting versions of the same data exist in different places. On a list of prospective borrowers, for example, one individual may appear with two different annual income figures. In such a situation, the risk analyst would struggle to gauge the borrower's ability to pay.
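A minimal sketch of how such conflicts can be surfaced, assuming hypothetical borrower records pulled from two source systems (the system names and `conflicting_values` helper are illustrative):

```python
from collections import defaultdict

# Hypothetical (borrower_id, source_system, annual_income) rows.
records = [
    ("B001", "loan_system", 85_000),
    ("B001", "crm", 58_000),  # conflicting annual income
    ("B002", "loan_system", 120_000),
    ("B002", "crm", 120_000),
]


def conflicting_values(rows):
    """Map each borrower ID to its sorted distinct incomes, if there is more than one."""
    values = defaultdict(set)
    for borrower_id, _source, income in rows:
        values[borrower_id].add(income)
    return {bid: sorted(incomes) for bid, incomes in values.items() if len(incomes) > 1}


print(conflicting_values(records))  # {'B001': [58000, 85000]}
```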

Misclassification occurs when data is placed in the wrong category. For example, a company account may be filed under an individual's contact record.

Errors may also be made when converting data from one format to another. For example, when monthly incomes are converted to annual amounts, some monthly figures may be left unconverted.
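One way to keep figures from being silently skipped is to carry the reporting period alongside each amount and convert everything through a single function that fails on anything it does not recognize. The sketch below assumes hypothetical records and a hypothetical `PERIODS_PER_YEAR` table.

```python
# Hypothetical income records tagged with the period they were reported in.
incomes = [
    {"customer": "C1", "amount": 5_000, "period": "monthly"},
    {"customer": "C2", "amount": 72_000, "period": "annual"},
    {"customer": "C3", "amount": 4_200, "period": "monthly"},
]

PERIODS_PER_YEAR = {"monthly": 12, "quarterly": 4, "annual": 1}


def to_annual(record):
    """Convert one record to an annual figure; fail loudly on unknown periods."""
    try:
        factor = PERIODS_PER_YEAR[record["period"]]
    except KeyError:
        raise ValueError(
            f"unknown period {record['period']!r} for {record['customer']}"
        ) from None
    return {"customer": record["customer"], "annual_income": record["amount"] * factor}


annual = [to_annual(r) for r in incomes]
print(annual)
```

Because the unit travels with the value, a monthly figure cannot be mistaken for an annual one, and an unexpected period such as "weekly" raises an error instead of passing through unconverted.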

Metadata is "data about data," that is, data that describes other data. A depositor's metadata, for example, may record when the account was opened, when the account was last reviewed for money laundering, and the number of deposit transactions. Errors can be made when entering such data, too.
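Simple rules can catch many metadata entry errors. The sketch below is illustrative only: the field names, the `metadata_errors` helper, and the specific rules (dates not in the future, review not before opening, non-negative counts) are assumptions rather than rules stated in the reading.

```python
from datetime import date


def metadata_errors(meta, today):
    """Return rule violations for a hypothetical depositor metadata record."""
    errors = []
    if meta["opened"] > today:
        errors.append("account opening date is in the future")
    review = meta.get("last_aml_review")
    if review is not None and review < meta["opened"]:
        errors.append("AML review predates account opening")
    if review is not None and review > today:
        errors.append("AML review date is in the future")
    if meta["deposit_count"] < 0:
        errors.append("negative number of deposit transactions")
    return errors


record = {"opened": date(2021, 3, 1), "last_aml_review": date(2020, 12, 31), "deposit_count": 42}
print(metadata_errors(record, today=date(2024, 6, 30)))
# ['AML review predates account opening']
```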

Every successful business operation is built on high-quality data. Flawed data either delays or prevents the successful completion of business activities. Because determining the specific impact of each data issue is challenging, it helps to group these impacts into categories. To this end, we define six primary categories for assessing both the negative impacts of data flaws and the opportunities for improvement that better data quality may bring:

As high-risk, high-impact institutions, banks are sensitive to more than financial impacts. Compromised data may also put a bank on a collision course with regulators with respect to:

Also known as the Currency and Foreign Transactions Reporting Act, the Bank Secrecy Act (BSA) is U.S. legislation passed in 1970. It aims to prevent criminals from using financial institutions to conceal or launder illicit proceeds. The Act establishes recordkeeping and reporting requirements for individuals and financial institutions. In particular, banks must document and report cash transactions totaling more than $10,000 by or on behalf of one customer in a single business day, whether made in one transaction or several. Consequently, any data entry mistake made while recording transaction details can put a bank on a collision course with the authorities.
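As a simplified sketch of why transaction details must be recorded correctly, the code below aggregates hypothetical cash deposits per customer per business day and flags totals over $10,000. It treats every amount as cash in and ignores exemptions and other details of the actual reporting rules; the record layout and the `reportable` function are assumptions.

```python
from collections import defaultdict
from datetime import date

CTR_THRESHOLD = 10_000  # daily cash totals *exceeding* this are reportable

# Hypothetical cash transactions: (customer_id, business_day, cash_amount)
cash_txns = [
    ("C1", date(2024, 5, 6), 6_000),
    ("C1", date(2024, 5, 6), 4_500),   # same day: aggregates to 10,500
    ("C2", date(2024, 5, 6), 10_000),  # exactly 10,000 is not *more than* the threshold
    ("C3", date(2024, 5, 7), 12_000),
]


def reportable(txns):
    """Return (customer, day) pairs whose aggregated cash exceeds the threshold."""
    totals = defaultdict(int)
    for customer, day, amount in txns:
        totals[(customer, day)] += amount
    return sorted(key for key, total in totals.items() if total > CTR_THRESHOLD)


for customer, day in reportable(cash_txns):
    print(customer, day)
```

If the second C1 deposit had been keyed under the wrong customer ID, C1's daily total would drop to 6,000 and the report for C1 would be missed.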

The Patriot Act was enacted in response to the September 11 attacks. It is intended to help the U.S. government intercept and obstruct terrorism. The Act requires banks to perform due diligence with regard to correspondent accounts established for foreign financial institutions and private banking accounts established for non-U.S. persons. In addition, the Act encourages financial institutions to share information with regulators and law enforcement whenever they encounter suspicious transactions or individuals.

The Sarbanes-Oxley Act (SOX) is a U.S. federal law that aims to protect investors by ensuring that data released to the public is reliable and accurate. It was passed in the aftermath of major accounting scandals, such as those at Enron and WorldCom, in which investors received misleading data and stock prices were inflated. Section 302 requires the principal executive officer and the principal financial officer to certify the accuracy of financial reports.

According to the Act, financial reports and statements must be:

The Basel II Accord is a set of requirements and recommendations for banks issued by the Basel Committee on Banking Supervision. It guides the quantification of credit and operational risk in order to determine how much capital a bank should hold as a buffer against those risks. For this reason, banks must ensure that all risk metrics and models are based on high-quality data.

To manage the risks associated with the use of flawed data, it is important to articulate business users' expectations for data quality. These expectations are defined in terms of "data quality dimensions that can be quantified, measured, and reported."

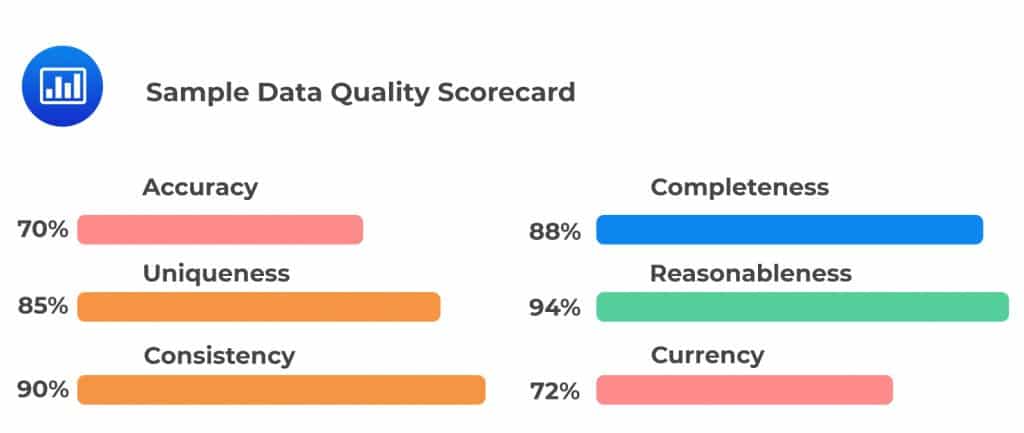

The academic literature on data quality proposes many different dimensions of data quality. For financial institutions, the initial development of a data quality scorecard can rely on six dimensions: accuracy, completeness, consistency, reasonableness, currency, and identifiability (uniqueness).

As the name implies, accuracy is all about correct information. When screening data for accuracy, we must ask ourselves one simple question: does the information reflect the real-world situation? In the realm of banking, for example, does a depositor really have $10 million in their account?
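Accuracy is typically measured against a trusted reference. The minimal sketch below assumes a hypothetical ledger that serves as the source of truth and a one-cent tolerance; the account IDs and the `accuracy_rate` helper are illustrative.

```python
def accuracy_rate(recorded, reference, tolerance=0.01):
    """Share of accounts whose recorded balance matches the reference within a tolerance."""
    matches = sum(
        1
        for acct, balance in recorded.items()
        if acct in reference and abs(balance - reference[acct]) <= tolerance
    )
    return matches / len(recorded)


recorded = {"A1": 10_000_000.00, "A2": 2_500.00, "A3": 730.15}
ledger = {"A1": 1_000_000.00, "A2": 2_500.00, "A3": 730.15}  # hypothetical source of truth

print(f"{accuracy_rate(recorded, ledger):.1%}")  # 66.7%
```

Here account A1 carries an extra zero: the data says $10 million, but the real-world balance is $1 million.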

Inaccurate information may hinder the bank from things such as:

Completeness refers to the extent to which the expected attributes of data are provided. When looking at data completeness, we seek to establish whether all of the data needed is available. For example, it may be mandatory to have every client's primary phone number, while their middle name may be optional.

Completeness is important since it affects the usability of data. When a customer’s email address and phone number are unavailable, for example, it may not be possible to contact and notify them of suspicious account activity.
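Completeness can be scored as the share of records with every mandatory field populated. In this sketch, which fields are mandatory is an assumption (primary phone and email, matching the examples above), the records are hypothetical, and empty strings count as missing.

```python
# Hypothetical rule: these fields are mandatory; middle_name is optional and not checked.
MANDATORY = ("primary_phone", "email")

clients = [
    {"id": 1, "primary_phone": "555-0100", "email": "a@example.com", "middle_name": None},
    {"id": 2, "primary_phone": None, "email": "b@example.com", "middle_name": "Lee"},
    {"id": 3, "primary_phone": "555-0102", "email": "", "middle_name": None},
]


def is_present(value):
    """Treat None and blank strings as missing."""
    return value is not None and str(value).strip() != ""


def completeness(records, fields):
    """Fraction of records with a populated value in every listed field."""
    complete = sum(all(is_present(r.get(f)) for f in fields) for r in records)
    return complete / len(records)


incomplete_ids = [r["id"] for r in clients if not all(is_present(r.get(f)) for f in MANDATORY)]
print(f"Mandatory-field completeness: {completeness(clients, MANDATORY):.1%}")  # 33.3%
print(incomplete_ids)  # [2, 3]
```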

Reasonableness measures conformance to consistency expectations relevant to specific operational contexts. For example, the cost of sales on a given day is not expected to exceed 105% of the running average cost of sales for the previous 30 days.
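The cost-of-sales rule above translates directly into a rolling check. This is a minimal sketch with hypothetical daily figures; the function name is illustrative, and days with fewer than 30 prior observations are simply not tested.

```python
from statistics import mean


def reasonableness_flags(daily_cost, window=30, limit=1.05):
    """Return indices of days whose cost of sales exceeds `limit` times
    the average of the previous `window` days."""
    flags = []
    for i in range(window, len(daily_cost)):
        baseline = mean(daily_cost[i - window:i])
        if daily_cost[i] > limit * baseline:
            flags.append(i)
    return flags


history = [100.0] * 30 + [104.0, 103.0, 140.0]  # hypothetical daily cost of sales
print(reasonableness_flags(history))  # [32]
```

Day 32 (140) exceeds 105% of its trailing 30-day average (about 100.23), while the two preceding days stay within the limit.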

Data is considered consistent if the information stored in one place matches relevant data stored elsewhere. In other words, values in one data set must be reasonably comparable to those in another data set. For example, if the human resources department's records show that an employee has already left the company but the payroll department still sends the individual a check, the data is inconsistent.
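The HR and payroll example can be checked by reconciling the two extracts. The employee IDs, status values, and `payroll_inconsistencies` helper below are hypothetical.

```python
# Hypothetical extracts: HR employment status and the payees in one payroll run.
hr_status = {"E100": "active", "E101": "terminated", "E102": "active"}
payroll_run = ["E100", "E101", "E102", "E103"]


def payroll_inconsistencies(hr, payees):
    """Return payees that HR lists as terminated or does not know about at all."""
    issues = {}
    for emp in payees:
        status = hr.get(emp)
        if status is None:
            issues[emp] = "not in HR records"
        elif status == "terminated":
            issues[emp] = "paid after termination"
    return issues


print(payroll_inconsistencies(hr_status, payroll_run))
# {'E101': 'paid after termination', 'E103': 'not in HR records'}
```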

Currency refers to how up to date the data is relative to its expected lifespan. In other words, is the data still relevant and useful in the current circumstances? To assess currency, the organization must establish how frequently each data element needs to be updated.
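Once refresh frequencies are agreed, currency can be checked mechanically. The data elements and maximum ages in this sketch are hypothetical examples, not requirements from the reading.

```python
from datetime import datetime, timedelta

# Hypothetical refresh requirements per data element.
MAX_AGE = {
    "fx_rates": timedelta(days=1),
    "credit_scores": timedelta(days=90),
    "kyc_documents": timedelta(days=365),
}


def stale_elements(last_updated, now):
    """Return data elements whose age exceeds their required refresh interval."""
    return sorted(name for name, ts in last_updated.items() if now - ts > MAX_AGE[name])


now = datetime(2024, 6, 30, 12, 0)
last_updated = {
    "fx_rates": datetime(2024, 6, 28, 17, 0),
    "credit_scores": datetime(2024, 5, 15),
    "kyc_documents": datetime(2022, 11, 1),
}
print(stale_elements(last_updated, now))  # ['fx_rates', 'kyc_documents']
```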

Uniqueness implies that no entity should exist more than once within the data set and that there should be a key that can be used to uniquely access each entity within the data set. For example, 'Andrew A. Peterson' and 'Andrew Peterson' may well be one and the same person.
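A crude way to surface candidates like the Peterson pair is to build a normalized matching key. The `match_key` rule here (lowercase, strip punctuation, drop single-letter middle initials) is a hypothetical simplification; real entity resolution would also compare dates of birth, addresses, and identifiers before merging anything.

```python
import re
from collections import defaultdict


def match_key(full_name):
    """Hypothetical matching key: lowercase, drop punctuation and middle initials."""
    tokens = re.sub(r"[^\w\s]", "", full_name.lower()).split()
    if len(tokens) > 2:
        middle = [t for t in tokens[1:-1] if len(t) > 1]
        tokens = [tokens[0], *middle, tokens[-1]]
    return " ".join(tokens)


def candidate_duplicates(names):
    """Group names that share a matching key; groups are for review, not auto-merge."""
    groups = defaultdict(list)
    for name in names:
        groups[match_key(name)].append(name)
    return [group for group in groups.values() if len(group) > 1]


customers = ["Andrew A. Peterson", "Andrew Peterson", "Andrea Peters"]
print(candidate_duplicates(customers))  # [['Andrew A. Peterson', 'Andrew Peterson']]
```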

Operational data governance refers to the processes and protocols put in place to ensure that the data can be trusted with a level of confidence acceptable for the organization's business needs. The data governance program defines the roles and responsibilities associated with managing data quality. Once a data error is identified, corrective action is taken immediately to avoid or minimize downstream impacts.

Corrective action usually entails notifying the right individuals to address the issue and determining whether the issue can be resolved within an agreed time frame. The data is then inspected to ensure that it complies with data quality rules. Service-level agreements (SLAs) specify the reasonable expectations for response and remediation.
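SLA tracking can be sketched as comparing elapsed time against agreed response and remediation windows. The severity levels, time limits, and issue fields below are hypothetical; for an issue that is still open, the elapsed time so far is measured up to `now`.

```python
from datetime import datetime, timedelta

# Hypothetical SLA terms by issue severity.
SLA = {
    "high": {"respond": timedelta(hours=4), "resolve": timedelta(days=1)},
    "low": {"respond": timedelta(days=1), "resolve": timedelta(days=10)},
}


def sla_breaches(issue, now):
    """Return which SLA commitments an issue has missed (or is already missing)."""
    terms = SLA[issue["severity"]]
    responded = issue.get("responded_at") or now
    resolved = issue.get("resolved_at") or now
    breaches = []
    if responded - issue["opened_at"] > terms["respond"]:
        breaches.append("response")
    if resolved - issue["opened_at"] > terms["resolve"]:
        breaches.append("remediation")
    return breaches


issue = {
    "severity": "high",
    "opened_at": datetime(2024, 6, 3, 9, 0),
    "responded_at": datetime(2024, 6, 3, 11, 30),
    "resolved_at": None,  # still open
}
print(sla_breaches(issue, now=datetime(2024, 6, 4, 15, 0)))  # ['remediation']
```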

Data validation is a one-time exercise carried out to establish whether the data complies with a set of defined business rules. Data inspection, on the other hand, is a continuous process aimed at:

The goal of data inspection is therefore not to eliminate data issues entirely. Rather, it aims to catch issues early, before they cause significant damage to the business. Operational data governance should include the following:

A data quality scorecard is a fundamental tool for effective data quality management. It measures the quality of data against predefined business rules over a given period of time. A scorecard can identify the rule or entry behind a weak or strong data quality score, which allows the company to take targeted measures to improve data quality.
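A minimal scorecard sketch, assuming three hypothetical business rules and a handful of account records: each rule gets a pass rate plus the IDs of failing records, so a weak score can be traced back to the entries behind it. Running the same function on each period's data would give the score over time.

```python
# Hypothetical business rules, each a (name, predicate) pair applied to a record.
RULES = [
    ("balance_non_negative", lambda r: r["balance"] >= 0),
    ("currency_code_valid", lambda r: r["currency"] in {"USD", "EUR", "GBP"}),
    ("email_present", lambda r: bool(r.get("email"))),
]


def scorecard(records):
    """Pass rate per rule plus the IDs of records that fail it."""
    card = {}
    for name, check in RULES:
        failures = [r["id"] for r in records if not check(r)]
        card[name] = {"score": 1 - len(failures) / len(records), "failed_ids": failures}
    return card


accounts = [
    {"id": "A1", "balance": 120.0, "currency": "USD", "email": "a@example.com"},
    {"id": "A2", "balance": -15.0, "currency": "USD", "email": ""},
    {"id": "A3", "balance": 980.0, "currency": "US$", "email": "c@example.com"},
    {"id": "A4", "balance": 45.0, "currency": "EUR", "email": "d@example.com"},
]

for rule, result in scorecard(accounts).items():
    print(f"{rule:22s} {result['score']:.0%}  failing: {result['failed_ids']}")
```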

Types of Data Quality Scorecards

A base-level metric is measured against a clearly defined data quality criterion, such as accuracy. It quantifies conformance with the acceptable level set for a defined data quality rule and is relatively easy to compute.

A complex metric is a weighted average of several metrics. Different weights are applied to a collection of metrics, which may include both base-level and other complex metrics.
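The weighted-average construction can be written out directly. The scores and weights below are hypothetical; the second call shows a complex metric that itself takes another complex metric as an input.

```python
def complex_metric(scores, weights):
    """Weighted average of component metric scores (each in [0, 1])."""
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same metrics")
    total_weight = sum(weights.values())
    return sum(scores[m] * weights[m] for m in scores) / total_weight


# Hypothetical base-level scores and business-assigned weights.
base_scores = {"accuracy": 0.98, "completeness": 0.90, "currency": 0.75}
weights = {"accuracy": 0.5, "completeness": 0.3, "currency": 0.2}
customer_data = complex_metric(base_scores, weights)

# A higher-level complex metric that combines a complex and a base-level metric.
overall = complex_metric(
    {"customer_data": customer_data, "transaction_accuracy": 0.99},
    {"customer_data": 0.6, "transaction_accuracy": 0.4},
)

print(f"{customer_data:.3f}")  # 0.910
print(f"{overall:.3f}")        # 0.942
```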

Complex data quality metrics can be accumulated in the following three ways:

Practice Question

Which of the following is NOT a factor to be considered by Higher-North Bank when assessing data quality expectations?

A. Accuracy

B. Completeness

C. Consistency

D. Variability

The correct answer is D.

Accuracy, completeness, and consistency are all dimensions used to assess data quality. Variability is not one of these dimensions.