Let’s break it down.

Think of a confidence interval as your statistical safety net. When you’re working with a sample—just a slice of a larger population—you’re trying to make an educated guess about something bigger: a population mean, proportion or some other characteristic.

But how do you express that guess with some level of certainty? That’s where a confidence interval comes in.

A confidence interval (CI) is a calculated range that is likely to contain the true population parameter you’re estimating. Not guaranteed—just likely, based on how confident you want to be. In technical terms, it’s derived from your sample data using a formula that incorporates the sample mean, variability and size. But don’t worry, we’ll get to the math soon.

Now here’s where it gets practical.

Suppose you’re trying to estimate how many hours university students typically study per week. You don’t have access to every student on the planet, so you survey, say, 100 of them and find that on average, they study 12 hours a week. But you also know this average could shift a bit if you surveyed a different group of students.

So, instead of saying “students study 12 hours per week,” you say something more statistically sound:

“We are 95% confident that the true average number of study hours lies between 10 and 14.”

That interval, from 10 to 14, gives your estimate context. It acknowledges uncertainty while still providing useful boundaries. In practice, this approach gives decision-makers something concrete to work with, rather than a single number that might be misleading in isolation.

Why does this matter?

In the real world, we almost never have the luxury of surveying entire populations. Businesses forecast sales based on a handful of customer reviews. Medical researchers evaluate treatment outcomes from limited clinical trials. And exam candidates—especially those tackling the CFA, GMAT, or FRM—use confidence intervals to grasp the reasoning behind data interpretation. In fact, if you’re sitting for any of these exams, mastering confidence intervals isn’t just helpful—it’s essential.

A good confidence interval doesn’t just tell you what might be true; it tells you how sure you can be. It’s a quantitative way of saying, “Here’s my best guess—and here’s the wiggle room.”

Let’s get this straight: a confidence interval is the range of values you come up with. A confidence level is how confident you are that your method for finding that range is reliable.

Think of it this way: you’re trying to guess the average number of hours people spend on their phones every day. You don’t ask everyone; you just take a sample. Let’s say your sample gives you an average of 4.5 hours and, based on your calculations, you get a 95% confidence interval of [3.5, 5.5].

That [3.5, 5.5] range? That’s your confidence interval.

The 95%? That’s your confidence level.

But what does that 95% really mean?

Here’s where it gets interesting. A lot of people assume it means there’s a 95% chance the true average is between 3.5 and 5.5. That sounds reasonable—but it’s not quite right.

Once you’ve calculated your interval, the true average is either in it or it isn’t. There’s no longer a probability involved.

Instead, here’s what the 95% means: if you repeated this same process over and over again—with new random samples each time—then about 95 out of every 100 confidence intervals you calculate would contain the true average. It’s not about the specific numbers you got this one time. It’s about the method working well across many repetitions.

Still sounds a bit abstract? Picture this:

Imagine you’re shooting arrows at a target in the dark. You can’t see the bullseye (the true average), but you can fire a hundred arrows (run a hundred studies). You build a confidence interval around each arrow’s hit point. If your method is solid, about 95 of those intervals will cover the actual bullseye—even though you never see it.

That’s the power of a good confidence level: you don’t have to know the truth to be confident your process gets you close most of the time.

And in the world of statistics—especially in finance, healthcare, and exam-level analysis—that kind of consistency is gold.
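The repeated-sampling idea can be checked with a small simulation. The sketch below is only an illustration: the population values (a true mean of 4.5 hours, a standard deviation of 1.5, samples of 50) and the function name `coverage_rate` are assumptions made up for the example, not figures from a real study. It draws many samples, builds a 95% interval around each sample mean, and counts how often the interval covers the true mean.

```python
import random
from statistics import NormalDist


def coverage_rate(true_mean=4.5, sigma=1.5, n=50, level=0.95,
                  trials=4000, seed=0):
    """Return the fraction of simulated Z-intervals that contain true_mean."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)  # about 1.96 for 95%
    margin = z * sigma / n ** 0.5  # sigma is treated as known here
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        x_bar = sum(sample) / n
        if x_bar - margin <= true_mean <= x_bar + margin:
            hits += 1
    return hits / trials


print(f"Share of intervals covering the true mean: {coverage_rate():.3f}")
```

The printed share should land close to 0.95. Any single interval either covers 4.5 or it doesn’t; the 95% shows up only across the whole batch.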

So how do we actually build a confidence interval?

Here’s the classic formula most commonly used when certain conditions are met (more on that later):

$$\text{Confidence Interval} = \bar{x} \pm Z \times \frac{\sigma}{\sqrt{n}}$$

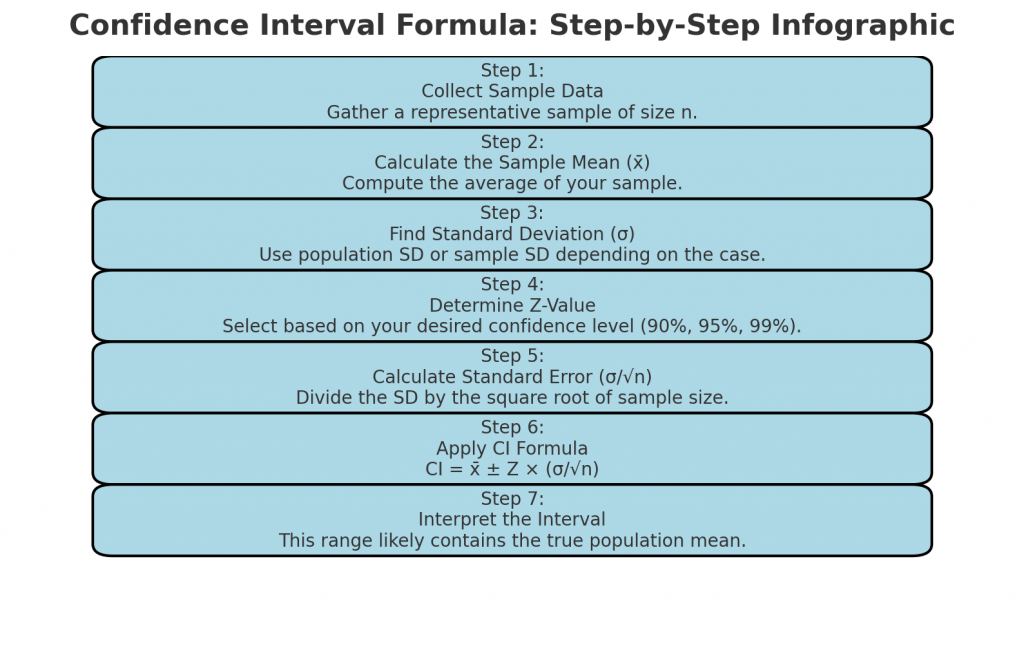

Let’s unpack this step by step:

That term $\frac{\sigma}{\sqrt{n}}$? It’s called the standard error, and it’s crucial. It tells you how much the sample mean is likely to vary from the true population mean. The larger your sample, the smaller your standard error, and the tighter your confidence interval.
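To see how the standard error shrinks as the sample grows, here is a minimal sketch. The value σ = 1.2 and the three sample sizes are illustrative assumptions, and `standard_error` is a helper named for this example.

```python
import math


def standard_error(sigma, n):
    """Standard error of the sample mean: sigma / sqrt(n)."""
    return sigma / math.sqrt(n)


# Quadrupling the sample size halves the standard error.
for n in (25, 100, 400):
    print(f"n = {n:>3}: standard error = {standard_error(1.2, n):.3f}")
```

Because n sits under a square root, cutting the standard error in half takes four times as many observations, not twice as many.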

If you’re using the Z-distribution (which assumes a known population standard deviation and a reasonably large sample size, usually $n > 30$), here are the go-to Z-values:

| Confidence Level | Z-Value |
| --- | --- |
| 90% | 1.645 |
| 95% | 1.960 |
| 99% | 2.576 |

So, for a 95% confidence interval, you’d take your sample mean and add and subtract 1.96 times the standard error.
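These Z-values come from the standard normal distribution: for a two-sided interval, you look up the point that leaves half of the leftover probability in each tail. A short sketch using Python’s standard library reproduces the table; the helper name `z_value` is an assumption for this example.

```python
from statistics import NormalDist


def z_value(level):
    """Two-sided critical value of the standard normal for a confidence level."""
    alpha = 1 - level  # probability left outside the interval
    return NormalDist().inv_cdf(1 - alpha / 2)  # split alpha across two tails


for level in (0.90, 0.95, 0.99):
    print(f"{level:.0%}: Z = {z_value(level):.3f}")
```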

Here’s the core idea: in statistics, we rarely know the exact population value we’re interested in. So, we estimate it using a sample, and then use math to calculate how much wiggle room we should allow for that estimate.

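That “wiggle room” is built on two things: how spread out the data are (σ) and how many observations you have (n). Together they set the standard error.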

And the Z-value? That’s just your dial for confidence. A 90% confidence level casts a narrower net. A 99% level casts a much wider one. You’re trading off precision for confidence—always.

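Scenario: You survey 100 people to find their daily screen time. The sample mean (x̄) is 6 hours, and the population standard deviation (σ) is 1.2 hours.

The standard error is σ / √n = 1.2 / √100 = 0.12 hours. For 95% confidence, Z = 1.96, so the margin of error is 1.96 × 0.12 ≈ 0.24 hours.

So, the 95% confidence interval is 6 ± 0.24, or [5.76, 6.24]. Interpretation? We’re 95% confident the average daily screen time is between 5.76 and 6.24 hours.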
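The screen-time calculation can be reproduced in a few lines. This is a sketch under the same assumptions as the scenario (known σ, Z-based interval); the function name `z_confidence_interval` is made up for the example.

```python
import math
from statistics import NormalDist


def z_confidence_interval(x_bar, sigma, n, level=0.95):
    """Return (low, high) for a Z-based interval around the sample mean."""
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)
    margin = z * sigma / math.sqrt(n)  # Z times the standard error
    return x_bar - margin, x_bar + margin


low, high = z_confidence_interval(x_bar=6, sigma=1.2, n=100)
print(f"95% CI: [{low:.2f}, {high:.2f}]")  # prints 95% CI: [5.76, 6.24]

# The same data at other confidence levels: higher confidence, wider interval.
for level in (0.90, 0.99):
    lo_, hi_ = z_confidence_interval(6, 1.2, 100, level)
    print(f"{level:.0%} CI width: {hi_ - lo_:.2f} hours")
```

Running the loop makes the trade-off concrete: with the same sample, the 99% interval is wider than the 90% one.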

Formula

CI = x̄ ± Z × (σ / √n)

Components Explained

• x̄ (Sample Mean): Average from your sample.

• Z (Z-score): Based on desired confidence level.

• σ (Standard Deviation): Spread of the population data.

• n (Sample Size): Number of observations in the sample.


When to Use Z vs. T Distribution

| Condition | Use Z | Use T |
| --- | --- | --- |
| Population SD Known | ✅ | ❌ |
| Population SD Unknown | ❌ | ✅ |
| Sample Size > 30 | ✅ | ❌ |
| Sample Size ≤ 30 | ❌ | ✅ |

Here’s where a lot of people get tripped up—understandably so. When we say we’re “95% confident” about an interval, it’s tempting to think that there’s a 95% chance the true population value is inside that range. But that’s not quite right.

Let’s clarify.

A 95% confidence interval means this: if you repeated the same sampling process an infinite number of times—same sample size, same method, over and over—then 95% of the confidence intervals you calculate would contain the actual population mean.

Confidence intervals are built using a formula that includes some random sample data. That means each time you draw a new sample, your confidence interval could shift slightly. But across many repetitions, about 95 out of every 100 of those intervals would hit the bullseye—the true value.

So, the 95% confidence level isn’t telling you about this one interval. It’s telling you about the method used to construct it. That’s a key idea from the Frequentist school of statistics: probability speaks to long-run frequencies, not one-off chances.

Here’s an analogy: imagine a basketball player who sinks 95 out of every 100 free throws. Once she has taken a particular shot, it either went in or it didn’t; the 95% describes her long-run record, not that one result. A confidence interval works the same way: once it is computed, it either contains the true value or it doesn’t, and the 95% describes how often the method succeeds.

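If you don’t know the population standard deviation or your sample is small, use the t-distribution instead of Z. Why? The t-distribution has heavier tails, so its critical values are larger and the resulting interval is wider. That extra width accounts for the added uncertainty of estimating the standard deviation from the sample, which keeps the interval’s coverage close to the stated confidence level.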
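A small simulation shows why the wider t-interval matters. The sketch below assumes a normal population with made-up values (true mean 6, σ = 1.2) and samples of only 10, where σ is treated as unknown and estimated from each sample. The t critical value 2.262 is hard-coded from a standard t-table (95%, 9 degrees of freedom) because Python’s standard library has no t-distribution. The function name `coverage` is an assumption for the example.

```python
import random
from statistics import mean, stdev

Z_95 = 1.960      # Z-value for 95% confidence
T_95_DF9 = 2.262  # t-table value for 95% confidence, df = n - 1 = 9


def coverage(critical_value, n=10, true_mean=6.0, sigma=1.2,
             trials=5000, seed=1):
    """Fraction of intervals x_bar ± critical_value * s / sqrt(n) covering true_mean."""
    rng = random.Random(seed)  # same seed, so both runs see the same samples
    hits = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        x_bar = mean(sample)
        s = stdev(sample)  # sample SD stands in for the unknown sigma
        margin = critical_value * s / n ** 0.5
        if abs(x_bar - true_mean) <= margin:
            hits += 1
    return hits / trials


print(f"Coverage using Z = 1.960: {coverage(Z_95):.3f}")
print(f"Coverage using t = 2.262: {coverage(T_95_DF9):.3f}")
```

With the Z-value, the intervals cover the true mean noticeably less often than 95% of the time, while the t-value brings coverage back to roughly the advertised level.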

Let’s be honest—confidence intervals might sound like a dry statistical concept at first, but they show up in places that impact real decisions, money, health, and even your exam score. Here’s how:

Let’s clear the air—confidence intervals confuse a lot of people, even those who work with data every day. Here are a few common missteps that can seriously trip you up:

What does a 95% confidence interval really mean?

It means that if you repeated the sampling process 100 times under identical conditions, about 95 of those intervals would capture the true population parameter. It’s about the long-run success rate of the method, not a single lucky shot.

What does a 0.05 confidence interval mean?

That’s a mix-up. 0.05 is typically the significance level (α), not the confidence interval. A 95% CI corresponds to α = 0.05, meaning that about 5% of intervals built with this method would miss the true value.

How do you interpret a confidence interval?

Think of it as the most reasonable estimate, plus a buffer of uncertainty. It’s a statistically informed guess of where the true population value lies, based on your sample data and how confident you want to be.

What exactly is a confidence interval?

It’s a calculated range, derived from sample data, that is likely to contain the unknown population parameter. Think of it as a “safe zone” for the truth.
