Model Risk

The objective of this chapter is to identify and explain modeling assumption errors...

After completing this reading, you should be able to:

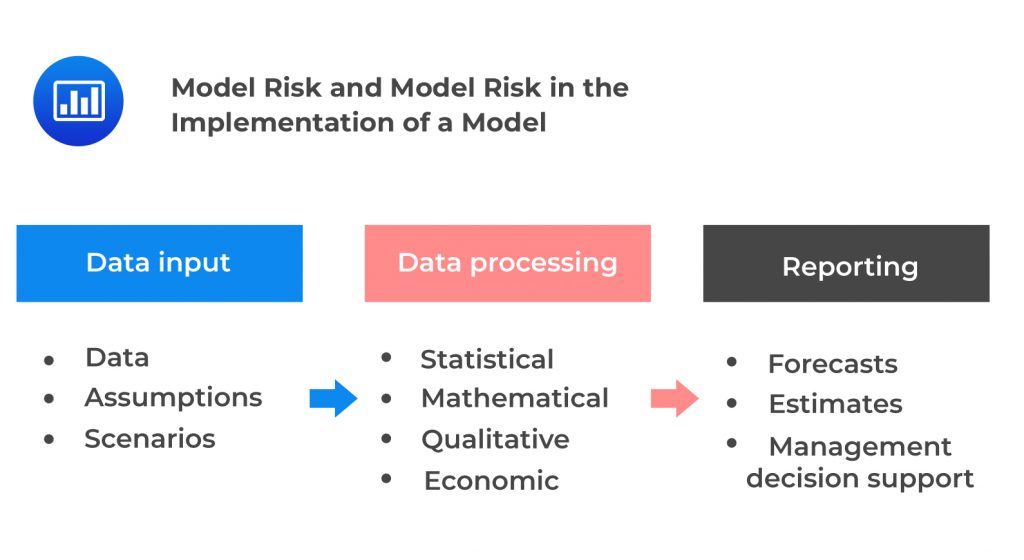

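A model is a quantitative method or process that applies statistical, financial, economic, or mathematical techniques and assumptions to process input data into quantitative estimates. A model contains three components:

- An information input component, which delivers assumptions and data to the model.

- A processing component, which transforms inputs into estimates.

- A reporting component, which translates the estimates into useful business information.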

Model Risk

Model risk is the potential for adverse consequences arising from decisions based on incorrect or misused model outputs and reports. Because models are used so widely, these risks can never be fully eliminated. Model risk can lead to financial losses, poor business and strategic decisions, or damage to a bank’s reputation. The following are some of the model-related risk types:

Model risk may arise during the implementation of a model. In other words, the implemented model may stray from its design, making it different from what the designers and users believe it to be. This may occur due to the following:

Implementation error can turn a good model into a bad one: once the implemented model deviates from its design, its outputs may become unpredictable. Implementation errors are generally caused by human error, and in extensive software projects some coding or logic errors are inevitable.
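One common control for implementation error is a parallel run: the production code is compared against an independent reference implementation written directly from the model design. The Python sketch below assumes a hypothetical expected-loss model (PD × LGD × EAD) purely for illustration; the names `reference_expected_loss`, `production_expected_loss`, `buggy_expected_loss`, and `parallel_run` are illustrative and not part of any standard library or framework.

```python
import math
from typing import Callable, Sequence

Case = tuple[float, float, float]  # (PD, LGD, EAD) for one test exposure


def reference_expected_loss(pd: float, lgd: float, ead: float) -> float:
    """Reference implementation written directly from the (hypothetical) design spec."""
    return pd * lgd * ead


def production_expected_loss(pd: float, lgd: float, ead: float) -> float:
    """Stand-in for the production code path; here it matches the spec."""
    return ead * lgd * pd


def parallel_run(
    impl: Callable[[float, float, float], float],
    ref: Callable[[float, float, float], float],
    cases: Sequence[Case],
    rel_tol: float = 1e-9,
) -> list[Case]:
    """Return the input cases where the implementation deviates from the reference."""
    return [
        c for c in cases
        if not math.isclose(impl(*c), ref(*c), rel_tol=rel_tol, abs_tol=1e-12)
    ]


cases = [(0.01, 0.45, 1_000_000.0), (0.20, 0.60, 500_000.0), (0.0, 0.40, 1_000_000.0)]
print(parallel_run(production_expected_loss, reference_expected_loss, cases))  # []


def buggy_expected_loss(pd: float, lgd: float, ead: float) -> float:
    # Hypothetical faulty implementation that forgets the LGD term.
    return pd * ead


print(len(parallel_run(buggy_expected_loss, reference_expected_loss, cases)))  # 2
```

The zero-PD case passes even for the faulty version, which illustrates why test cases should span a range of inputs rather than a single convenient point.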

The board of directors and senior management are responsible for model risk governance at the highest level. They exercise this responsibility by establishing a bank-wide approach to model risk management. A bank’s board and senior management need to build a strong model risk management framework that fits into the organization’s broader risk management, with standards for model development, implementation, use, and validation. A model risk function reporting to the Chief Risk Officer (CRO) should be established and made responsible for the model risk management (MRM) framework and governance. In addition, an independent model validation function should be responsible for validating models.

Maintaining a model’s quality over its lifecycle requires attention to model development, documentation, validation, inventory, and follow-up. Banks should keep a complete inventory of every existing model to facilitate MRM, recording all of its uses, changes, and approval status. Documentation of model development should be detailed enough to convey how the model works, its limitations, and its key assumptions. At a minimum, documentation should cover the data sources, the model methodology report, the model calibration report, the test plan, the user manual, the technical environment, and operational risk.
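A model inventory is essentially a registry keyed by model that tracks uses, changes, and approval status. The Python sketch below is a minimal illustration under stated assumptions: the `ModelRecord`/`ModelInventory` names and the status values are hypothetical, and the rule that any logged change sends a model back for validation is a simplification (in practice, bank policy defines which changes require revalidation). It also adds a simple independence check so that a developer cannot approve their own model.

```python
from dataclasses import dataclass, field
from datetime import date
from enum import Enum


class ApprovalStatus(Enum):
    IN_DEVELOPMENT = "in development"
    PENDING_VALIDATION = "pending validation"
    APPROVED = "approved"
    RETIRED = "retired"


@dataclass
class ModelRecord:
    """One inventory entry: what the model is, who built it, where it is used."""
    model_id: str
    purpose: str
    developer: str
    uses: list[str] = field(default_factory=list)
    status: ApprovalStatus = ApprovalStatus.IN_DEVELOPMENT
    change_log: list[tuple[date, str]] = field(default_factory=list)

    def record_change(self, when: date, description: str) -> None:
        # Assumption: any logged change returns the model to validation before reuse.
        self.change_log.append((when, description))
        self.status = ApprovalStatus.PENDING_VALIDATION


class ModelInventory:
    """A bank-wide register of models, their uses, changes, and approval status."""

    def __init__(self) -> None:
        self._models: dict[str, ModelRecord] = {}

    def register(self, record: ModelRecord) -> None:
        if record.model_id in self._models:
            raise ValueError(f"duplicate model id: {record.model_id}")
        self._models[record.model_id] = record

    def approve(self, model_id: str, validator: str) -> None:
        record = self._models[model_id]
        # Independence check: the validator must not be the model's developer.
        if validator == record.developer:
            raise PermissionError("validator must be independent of the developer")
        record.status = ApprovalStatus.APPROVED

    def awaiting_validation(self) -> list[str]:
        return [m.model_id for m in self._models.values()
                if m.status is ApprovalStatus.PENDING_VALIDATION]


inventory = ModelInventory()
pd_model = ModelRecord("PD-001", "retail probability of default",
                       developer="alice", uses=["loan pricing"])
inventory.register(pd_model)
inventory.approve("PD-001", validator="bob")
pd_model.record_change(date(2024, 1, 15), "recalibrated on new data")
print(inventory.awaiting_validation())  # ['PD-001']
```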

Independent model validation ensures that the model performs as expected, meets the bank’s needs, and satisfies regulatory requirements. Model validation covers, among other things, the model’s purpose, design, assumptions and development, performance, usage, and end-to-end documentation. No conflict of interest should arise during the validation process. Banks should also have a performance monitoring system for decision models that detects deviations from targets early, so that remedial or preventive action can be taken. A comprehensive follow-up may examine the statistical model, the decision strategies, and any expert adjustments, among other things.
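Early detection of deviations from targets is often implemented as a traffic-light scheme around a monitored metric. In the minimal Python sketch below, the metric (an observed default rate), the target, and the band widths are hypothetical values chosen for illustration; real thresholds would be set in the bank’s monitoring policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MonitoringThresholds:
    target: float        # expected value of the monitored metric
    warning_band: float  # absolute deviation that triggers an early warning
    action_band: float   # absolute deviation that requires remedial action


def monitoring_status(observed: float, t: MonitoringThresholds) -> str:
    """Classify an observed metric as green, amber, or red against its target."""
    deviation = abs(observed - t.target)
    if deviation >= t.action_band:
        return "red"    # remedial action: e.g. recalibrate, redevelop, or restrict use
    if deviation >= t.warning_band:
        return "amber"  # early warning: investigate before the deviation widens
    return "green"


# Hypothetical target default rate of 2% with 0.5% and 1% bands.
thresholds = MonitoringThresholds(target=0.02, warning_band=0.005, action_band=0.01)
for observed in (0.021, 0.026, 0.035):
    print(observed, monitoring_status(observed, thresholds))
# 0.021 green
# 0.026 amber
# 0.035 red
```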

Optimized processes and technology platforms should support the MRM framework, and the technology infrastructure is an important component of it. The interconnections between source data systems, analytical engines, and reporting platforms should be well documented, with a clearly stated rationale for each platform choice. For the underlying systems, the completeness, accuracy, and timeliness of the data that feed models should be a top priority. Input data, models, and output data should be clearly separated. The process for choosing between internal and vendor systems should be clearly articulated.

Additionally, a robust vendor selection process should be run independently so that the bank can assess how candidate systems fit within its current infrastructure. Some financial institutions are moving from vendor solutions to open-source modeling platforms such as Python, Julia, and R in areas such as machine learning and derivatives pricing. The motivations are transparency, speed, cost, flexibility, and auditability. Models need to pass regulatory tests and, at the same time, support strategic decision-making.

This assessment covers the periodic activities that take place after a model has been approved for use, and it helps verify that the model remains usable and functions appropriately. Once validated and approved, a model can be used officially for internal reporting within a bank. After validation and regulatory testing are complete, however, there is a risk of complacency, even though errors can still occur after validation and approval.

Models usually generate reports, which are the most visible result of a model. Ongoing monitoring should continue throughout a model’s life to track known model limitations and to identify new ones. Model outputs can also be checked against appropriate benchmarks, which help reveal any rapid divergence. Discrepancies between the model output and the benchmarks call for further investigation into the sources and extent of the differences.
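A benchmark comparison can be automated by computing the relative difference between each model output and its benchmark and flagging anything beyond a tolerance for investigation. The Python sketch below assumes outputs keyed by a hypothetical position identifier and a 5% tolerance; both are illustrative choices, not prescribed values.

```python
def flag_divergences(
    model_out: dict[str, float],
    benchmark_out: dict[str, float],
    tolerance: float = 0.05,
) -> dict[str, float]:
    """Return items whose relative difference from the benchmark exceeds the tolerance."""
    flagged = {}
    for key, value in model_out.items():
        bench = benchmark_out.get(key)
        if bench is None:
            continue  # no benchmark available for this item; handled separately
        # Guard against division by zero for a zero-valued benchmark.
        rel_diff = abs(value - bench) / max(abs(bench), 1e-12)
        if rel_diff > tolerance:
            flagged[key] = rel_diff
    return flagged


# Hypothetical valuations from the production model and an independent benchmark model.
model = {"swap_A": 102.0, "swap_B": 98.0, "option_C": 14.0}
benchmark = {"swap_A": 100.0, "swap_B": 97.5, "option_C": 11.0}
print(flag_divergences(model, benchmark))  # flags only option_C (about 27% above the benchmark)
```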

The Supervisory Guidance on Model Risk Management, also referred to as SR 11-7, provides guidance on the whole model risk management process for the banking sector. The following are some of the best practices banks should follow during model development and implementation.

A clear statement of purpose: The model development and implementation process should start with a clear statement of purpose so that the model does not drift from its intended use. The model design, theory, and logic should be clearly documented and supported by published research and industry practice. The merits and limitations of the modeling techniques, mathematical specifications, numerical techniques, and approximations should be clearly explained.

A careful assessment of data quality and relevance, with appropriate documentation: Data is essential to model development, and its quality must not compromise the model’s validity. Developers should explain why the data are relevant to the model and how they help the model achieve its intended purpose. Any limitations of the data and information used, including how representative they are and any assumptions made about them, should also be explained.
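Basic data-quality checks are straightforward to automate. The Python sketch below computes two simple measures for a single input field: completeness, and the share of values inside a plausible range. The field (loan-to-value ratios), the bounds, and the sample values are hypothetical, and a real assessment would also cover relevance and representativeness, which this code does not address.

```python
import math
from typing import Optional, Sequence


def data_quality_report(
    values: Sequence[Optional[float]], lower: float, upper: float
) -> dict[str, float]:
    """Summarize completeness and range validity for one model input field."""
    n = len(values)
    # Completeness: share of records with a usable (non-missing, non-NaN) value.
    present = [v for v in values if v is not None and not math.isnan(v)]
    # Accuracy proxy: share of present values inside the plausible range.
    in_range = [v for v in present if lower <= v <= upper]
    return {
        "completeness": len(present) / n if n else 0.0,
        "in_range_share": len(in_range) / len(present) if present else 0.0,
    }


# Hypothetical loan-to-value ratios with one missing, one NaN, and one implausible value.
ltv = [0.60, 0.75, None, 1.20, float("nan"), 0.90, 4.50]
report = data_quality_report(ltv, lower=0.0, upper=2.0)
print(report)  # completeness is 5/7, in-range share is 4/5
```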

Model testing: Testing is an essential part of model development. It evaluates the individual model components and the model’s overall functionality to confirm that they perform as intended. Testing assesses the model’s accuracy, its potential limitations, its behavior over a range of input values, and the impact of its assumptions, which helps identify situations in which the model might be unreliable. Testing activities should also be appropriately documented.
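Checking a model’s behavior over a range of inputs can be sketched as a sweep: evaluate the model across a grid of input values and test the outputs against properties the design says should hold. The Python below assumes a hypothetical expected-loss model and two illustrative properties (outputs are non-negative and do not decrease as PD rises); the properties appropriate for a real model depend on its design.

```python
from typing import Callable, Sequence


def sweep(model: Callable[[float], float], grid: Sequence[float]) -> list[float]:
    """Evaluate a one-input model at every point of an input grid."""
    return [model(x) for x in grid]


def behaviour_issues(outputs: Sequence[float]) -> list[str]:
    """Flag outputs that violate expected properties: non-negative and non-decreasing."""
    issues = []
    if any(y < 0 for y in outputs):
        issues.append("negative output")
    if any(later < earlier for earlier, later in zip(outputs, outputs[1:])):
        issues.append("output not monotone in the input")
    return issues


def expected_loss(pd: float, lgd: float = 0.45, ead: float = 1_000_000.0) -> float:
    # Hypothetical model under test: expected loss = PD x LGD x EAD.
    return pd * lgd * ead


pd_grid = [0.0, 0.01, 0.05, 0.20, 0.50, 1.0]
print(behaviour_issues(sweep(expected_loss, pd_grid)))  # []


def flawed_loss(pd: float) -> float:
    # Hypothetical flawed specification whose loss falls at high PD.
    return 1_000_000.0 * pd * (1.0 - pd)


print(behaviour_issues(sweep(flawed_loss, pd_grid)))  # ['output not monotone in the input']
```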

Sound development of judgmental and qualitative aspects: Statistical model outputs may be adjusted using judgmental and qualitative inputs. Such adjustments should be made appropriately and systematically, and they should be properly documented.
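The documentation requirement for judgmental adjustments can be enforced in code by refusing any adjustment that lacks a rationale or an approver and by keeping an audit trail. In this minimal Python sketch, the `Overlay` structure, its additive form, and the example values are all hypothetical; banks may also apply multiplicative adjustments or adjust model inputs instead.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Overlay:
    """A judgmental adjustment applied on top of a statistical model output."""
    amount: float     # additive adjustment, in the output's units
    rationale: str    # why the statistical output is being adjusted
    approved_by: str  # who signed off the adjustment
    effective: date


def apply_overlays(model_output: float, overlays: list[Overlay]) -> tuple[float, list[str]]:
    """Return the adjusted output and an audit trail of every overlay applied."""
    adjusted = model_output
    trail = []
    for o in overlays:
        # Reject undocumented adjustments outright.
        if not o.rationale.strip() or not o.approved_by.strip():
            raise ValueError("undocumented overlay rejected")
        adjusted += o.amount
        trail.append(f"{o.effective.isoformat()}: {o.amount:+,.0f} "
                     f"({o.rationale}; approved by {o.approved_by})")
    return adjusted, trail


overlay = Overlay(150_000.0, "model misses a recent sector downturn",
                  "Credit Risk Committee", date(2024, 3, 31))
value, audit = apply_overlays(1_200_000.0, [overlay])
print(value)     # 1350000.0
print(audit[0])  # 2024-03-31: +150,000 (model misses a recent sector downturn; approved by Credit Risk Committee)
```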

Calculations of the model should be appropriately coordinated with the capabilities and requirements of information systems: Models are usually embedded in larger information systems that manage the data flowing into a model from various sources and handle the aggregation and reporting of model outcomes, so all model calculations must fit those systems’ capabilities and requirements. Model risk management relies on substantial investment in supporting systems to ensure data and reporting integrity. Such investment also enables controls and tests that confirm models are implemented properly, integrated effectively with other systems, and used appropriately.

Model validation is a set of processes and activities intended to verify that models perform in line with their design objectives and business uses. It also identifies potential model limitations and assumptions and assesses their possible impact. The following are the critical elements of a robust model validation process.

This entails assessing the quality of the model design, theory, and logic. The model documentation and the justifications for the methods used and the variables selected are reviewed. Model documentation and testing help reveal the model’s limitations and assumptions. Documented evidence should support all model choices, such as the data, the overall theoretical construction, the assumptions, and specific mathematical calculations.

Validation also ensures that the judgment exercised in model design and development is well informed and consistent with published research and industry practice. All aspects of the model are subjected to critical analysis, which involves evaluating the quality and extent of the developmental evidence and conducting additional analysis and testing.

Monitoring begins once a model is first implemented in production systems for actual use by the bank. It should continue over time, at a frequency that depends on factors such as the availability of new data and the nature of the model. Ongoing monitoring confirms that the model is correctly implemented and appropriately used. It also plays an essential part in evaluating whether changes in products, exposures, clients, or market conditions affect the current model. This determines whether the model needs adjustment, redevelopment, or replacement to reflect those changes, and whether extending it to the new circumstances is valid.
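The text does not name a specific technique for detecting shifts in the population a model is applied to; the population stability index (PSI) is shown here as one common industry choice. The Python sketch below compares binned shares from the development sample with current shares; the bins and shares are hypothetical. A frequently quoted rule of thumb treats PSI below 0.1 as stable and above 0.25 as a significant shift, but such cutoffs are conventions rather than regulatory thresholds.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6
) -> float:
    """PSI between two binned distributions given as shares per bin."""
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        psi += (a - e) * math.log(a / e)
    return psi


# Hypothetical score-band shares at development time versus today.
development = [0.25, 0.25, 0.25, 0.25]
current = [0.10, 0.20, 0.30, 0.40]
print(round(population_stability_index(development, current), 3))  # 0.228
```

A value this close to the upper rule-of-thumb cutoff would typically prompt a closer look at whether the model still fits the current population.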

Banks need to design a program of ongoing testing and evaluation of model performance, together with procedures for responding to any problems that emerge. The program should include process verification and benchmarking.

Outcomes analysis compares a model’s outputs with the corresponding actual outcomes. The comparison depends on the model’s objectives and may include, among other things, an assessment of the accuracy of estimates and an evaluation of rank-ordering ability. These comparisons evaluate the model’s performance by establishing expected ranges of outcomes relative to the intended objectives and by assessing the reasons for any observed variation. If outcomes analysis provides evidence of poor performance, the bank is expected to take action to address the issues. Outcomes analysis relies on statistical tests or other quantitative measures and is conducted on an ongoing basis to ensure that the model continues to perform in line with its design objectives and the bank’s uses.
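For a model that predicts default probabilities, the two objectives mentioned above can be measured separately: the Brier score for the accuracy of the estimates and the area under the ROC curve (AUC) for rank-ordering ability. These metrics are one common choice rather than ones the text prescribes, and the data below are hypothetical.

```python
from typing import Sequence


def brier_score(predicted: Sequence[float], outcomes: Sequence[int]) -> float:
    """Mean squared error between predicted probabilities and 0/1 outcomes (accuracy)."""
    return sum((p - y) ** 2 for p, y in zip(predicted, outcomes)) / len(outcomes)


def auc(predicted: Sequence[float], outcomes: Sequence[int]) -> float:
    """Probability that a random default is ranked above a random non-default (rank-ordering)."""
    pos = [p for p, y in zip(predicted, outcomes) if y == 1]
    neg = [p for p, y in zip(predicted, outcomes) if y == 0]
    if not pos or not neg:
        raise ValueError("need both defaults and non-defaults")
    # Pairwise comparison; ties count as half a correct ordering.
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical predicted PDs and realized defaults (1 = default).
predicted = [0.02, 0.25, 0.05, 0.30, 0.01, 0.20]
defaulted = [0, 0, 0, 1, 0, 1]
print(round(brier_score(predicted, defaulted), 5))  # 0.19925
print(auc(predicted, defaulted))                    # 0.875
```

The pairwise AUC is quadratic in sample size, which is fine for illustration; larger portfolios would normally use a rank-based computation.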

SR 11-7 provides guidelines for an effective model validation process. The following are some of the challenges banks face in meeting those requirements.

Use of vendor or third-party models: According to SR 11-7, all models, whether developed internally or purchased, should be validated with the same rigor. However, vendors often limit transparency to protect their intellectual property. Banks may therefore have to relax the rigor of their validation and rely on benchmarking and outcomes analysis, among other methods.

Model documentation: According to SR 11-7, model documentation should be detailed and clear enough that a knowledgeable third party could use it to recreate the model without access to the model development code. However, some newer models, such as machine learning models, have a much more complex development process, which makes documentation more challenging. Even so, SR 11-7 recommends standardizing the model development process and producing documentation as required.

The verification process: SR 11-7 requires banks to use models that have been approved by an independent verification team. However, some models are too complex even for the verification team to understand fully, so the team needs the training to understand the full range of models a bank might use.

Explainability challenge: SR 11-7 requires that a model’s design and variable selection be explainable. This is challenging for models built on complex neural networks, which may be rejected for lack of explainability even when they outperform standard models.

Conceptual soundness: Assessing the conceptual soundness of a model entails assessing the quality of the design and construction, review of the documentation, and confirming the soundness of the selected variables. This requires the validation practitioners to be familiar with all the possible models banks can adopt.

However, this is not entirely possible, because new techniques keep emerging and increasingly complex models are continually being developed. This makes it difficult for the validation team to assess whether these models are fit for purpose and suitable for the intended application.

Practice Question

At an annual strategy meeting of a rapidly expanding fintech company, the CEO brings up concerns highlighted by regulatory authorities regarding the company’s approach to model risk management. The head of Risk Management is tasked with proposing changes in alignment with the best practices set out by central banking regulations. Which of the following recommendations should the Risk Manager prioritize to align with regulatory best practices?

A. Assign the team responsible for model development to also handle model validation and periodic backtests.

B. Initiate routine monitoring protocols for a model exclusively after its preliminary backtests demonstrate no issues.

C. Rely solely on the predefined assumptions of third-party models, ensuring that they’re employed exactly as the external providers intended.

D. Cultivate an ongoing, dynamic process for model risk management, rather than limiting evaluations to predetermined intervals.

Solution

The correct answer is D.

Establishing a continuous process for model risk management ensures that potential risks are identified and addressed promptly as financial landscapes and risk profiles evolve. This is in line with risk management best practices, which emphasize ongoing assessment rather than only periodic checks.

A is incorrect. Combining the roles of model development and validation in the same team can lead to conflicts of interest. An independent validation is crucial to objectively assess the model’s efficacy and flaws. When developers also validate, they may overlook issues they introduced or be biased towards the model’s correctness.

B is incorrect. Models should be monitored continuously, starting from their initial deployment, irrespective of the backtest results. Waiting for successful backtests can introduce a delay in identifying model issues that may arise in real-world scenarios different from backtest conditions. Additionally, backtesting is just one part of model validation; continuous monitoring can catch issues that backtests might miss.

C is incorrect. While third-party models come with their own set of assumptions, it is essential for companies to validate these models based on their unique operations and risk exposures. Blindly accepting external assumptions can lead to incorrect risk assessments and potential model failures in the company’s specific context.

Things to Remember

- Model risk management pertains to the identification, assessment, and mitigation of risks arising from the use of models in financial decision-making.

- Models, though designed to capture reality, are simplifications and can lead to errors if not appropriately managed, understood, or used.

- Risks can emerge from data inaccuracies, incorrect assumptions, or flaws in the model’s structure or design.

- Proper validation, including both testing during development and independent verification, is crucial for a model’s reliability and for avoiding potential biases.