
In the previous reading, we looked at joint discrete distribution functions. In this reading, we will determine conditional and marginal probability functions from joint discrete probability functions.

Suppose that we know the joint probability distribution of two discrete random variables, \(\mathrm{X}\) and \(\mathrm{Y}\), and that we wish to obtain the individual probability mass function for \(\mathrm{X}\) or \(\mathrm{Y}\). These individual mass functions of \(X\) and \(Y\) are referred to as the marginal probability distributions of \(X\) and \(Y\), respectively.

Once we have these marginal distributions, we can analyze the two variables separately.

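Recall that the joint probability mass function of the discrete random variables \(\mathrm{X}\) and \(\mathrm{Y}\), denoted as \(\mathrm{f}_{\mathrm{XY}}(\mathrm{x}, \mathrm{y})\), satisfies the following properties: \(\mathrm{f}_{\mathrm{XY}}(\mathrm{x}, \mathrm{y}) \geq 0\) for every pair \((\mathrm{x}, \mathrm{y})\), and \(\sum_{\mathrm{x}} \sum_{\mathrm{y}} \mathrm{f}_{\mathrm{XY}}(\mathrm{x}, \mathrm{y})=1\).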

Let \(\mathrm{X}\) and \(\mathrm{Y}\) have the joint probability mass function \(\mathrm{f}(\mathrm{x}, \mathrm{y})\) defined on the space \(\mathrm{S}\).

The probability mass function of \(\mathrm{X}\) alone, which is called the marginal probability mass function of \(\mathbf{X}\), is given by:

$$

f_X(x)=\sum_y f(x, y)=P(X=x), \quad x \in S_X

$$

where the summation is taken over all possible values of \(\mathrm{y}\) for each given \(\mathrm{x}\) in space \(\mathrm{S}_{\mathrm{X}}\).

Similarly, the marginal probability mass function of \(\mathrm{Y}\) is given by:

$$

f_Y(y)=\sum_x f(x, y)=P(Y=y), \quad y \in S_Y

$$

where the summation is taken over all possible values of \(\mathrm{x}\) for each given \(\mathrm{y}\) in space \(\mathrm{S}_{\mathrm{y}}\).

Example 1: Marginal Probability Mass Function

Suppose that the joint p.m.f of \(X\) and \(Y\) is given as:

$$

f(x, y)=\frac{x+y}{21}, \quad x=1,2, \quad y=1,2,3

$$

a. The marginal probability mass function of \(\mathrm{X}\), \(\mathrm{f}_{\mathrm{X}}(\mathrm{x})\), is given by:

$$

f_X(x)=\sum_y f(x, y)=P(X=x), \quad x \in S_X

$$

Thus,

$$

\begin{aligned}

\mathrm{f}_{\mathrm{x}}(\mathrm{x}) &=\sum_{\mathrm{y}=1}^3 \frac{\mathrm{x}+\mathrm{y}}{21} \\

&=\frac{\mathrm{x}+1}{21}+\frac{\mathrm{x}+2}{21}+\frac{\mathrm{x}+3}{21}=\frac{3 \mathrm{x}+6}{21}, \text { for } \mathrm{x}=1,2

\end{aligned}

$$

b. Similarly, the marginal probability mass function of \(\mathrm{Y}\) is given by:

$$

f_Y(y)=\sum_x f(x, y)=P(Y=y), \quad y \in S_Y

$$

Thus,

$$

\begin{aligned}

\mathrm{f}_{\mathrm{Y}}(\mathrm{y}) &=\sum_{\mathrm{x}=1}^2 \frac{\mathrm{x}+\mathrm{y}}{21} \\

&=\frac{1+\mathrm{y}}{21}+\frac{2+\mathrm{y}}{21}=\frac{3+2 \mathrm{y}}{21}, \text { for } \mathrm{y}=1,2,3

\end{aligned}

$$
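The two closed forms above can be checked by brute-force summation. The following is a minimal sketch using exact fractions; the function names `joint_pmf`, `marginal_x`, and `marginal_y` are illustrative choices, not part of the reading.

```python
from fractions import Fraction

# Support of the joint pmf in Example 1.
X_VALUES = (1, 2)
Y_VALUES = (1, 2, 3)


def joint_pmf(x, y):
    """Joint pmf f(x, y) = (x + y) / 21 on the support above."""
    return Fraction(x + y, 21)


def marginal_x(x):
    """Marginal pmf of X: sum the joint pmf over every y for a fixed x."""
    return sum(joint_pmf(x, y) for y in Y_VALUES)


def marginal_y(y):
    """Marginal pmf of Y: sum the joint pmf over every x for a fixed y."""
    return sum(joint_pmf(x, y) for x in X_VALUES)


# Compare the brute-force sums with the closed forms derived above.
for x in X_VALUES:
    assert marginal_x(x) == Fraction(3 * x + 6, 21)
for y in Y_VALUES:
    assert marginal_y(y) == Fraction(3 + 2 * y, 21)

print({x: str(marginal_x(x)) for x in X_VALUES})
print({y: str(marginal_y(y)) for y in Y_VALUES})
```

Using `Fraction` rather than floats keeps the comparison exact, so the assertions test the algebra rather than rounding behaviour.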

\(X\) and \(Y\) have the joint probability function as given in the table below:

$$\begin{array}{|c|c|c|c|} X & {0} & {1} & {2} \\ {\Huge{\diagdown} } & & & \\ Y & & & \\ \hline1 & 0.12& 0.15& 0 \\

\hline 2 & 0.13& 0.05& 0.03\\

\hline 3 & 0.18& 0.06 & 0.07\\

\hline 4 &0.17& 0.04& 0 \\ \end{array}$$

Determine the probability distribution of \(X\).

We know that:

$$

f_X(x)=\sum_y f(x, y)=P(X=x), \quad x \in S_X

$$

So that the marginal probabilities are:

$$

\begin{align}

&\text{P}(\text{X}=0)=0.12+0.13+0.18+0.17=0.6 \\

&\text{P}(\text{X}=1)=0.15+0.05+0.06+0.04=0.3 \\

&\text{P}(\text{X}=2)=0.03+0.07=0.1

\end{align}

$$

Therefore, the probability distribution of \(X\) is:

$$\begin{array}{|l|c|c|c|}

\hline \text{X} & 0& 1 & 2\\

\hline \text{P}(\mathrm{X}=\text{x})& 0.6& 0.3 & 0.1\\

\hline \end{array}$$
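The same column sums can be reproduced programmatically. This is a sketch only: it stores the table as a dictionary keyed by \((x, y)\) and takes the \((X=2, Y=4)\) entry as 0, an assumption that is consistent with the probabilities summing to 1. The helper name `marginal` is hypothetical.

```python
# Joint probabilities from the table, keyed by (x, y).
# Assumption: the (x=2, y=4) entry is 0, which makes the table sum to 1.
joint = {
    (0, 1): 0.12, (1, 1): 0.15, (2, 1): 0.00,
    (0, 2): 0.13, (1, 2): 0.05, (2, 2): 0.03,
    (0, 3): 0.18, (1, 3): 0.06, (2, 3): 0.07,
    (0, 4): 0.17, (1, 4): 0.04, (2, 4): 0.00,
}


def marginal(joint_table, axis):
    """Marginal pmf along `axis` (0 for X, 1 for Y) of a {(x, y): p} table."""
    totals = {}
    for key, p in joint_table.items():
        totals[key[axis]] = totals.get(key[axis], 0.0) + p
    # Round away floating-point noise from adding decimals.
    return {value: round(total, 10) for value, total in sorted(totals.items())}


marginal_of_x = marginal(joint, axis=0)
marginal_of_y = marginal(joint, axis=1)
print(marginal_of_x)
print(marginal_of_y)
```

Passing `axis=1` gives the marginal distribution of \(Y\) from the same table by summing across each row instead of down each column.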

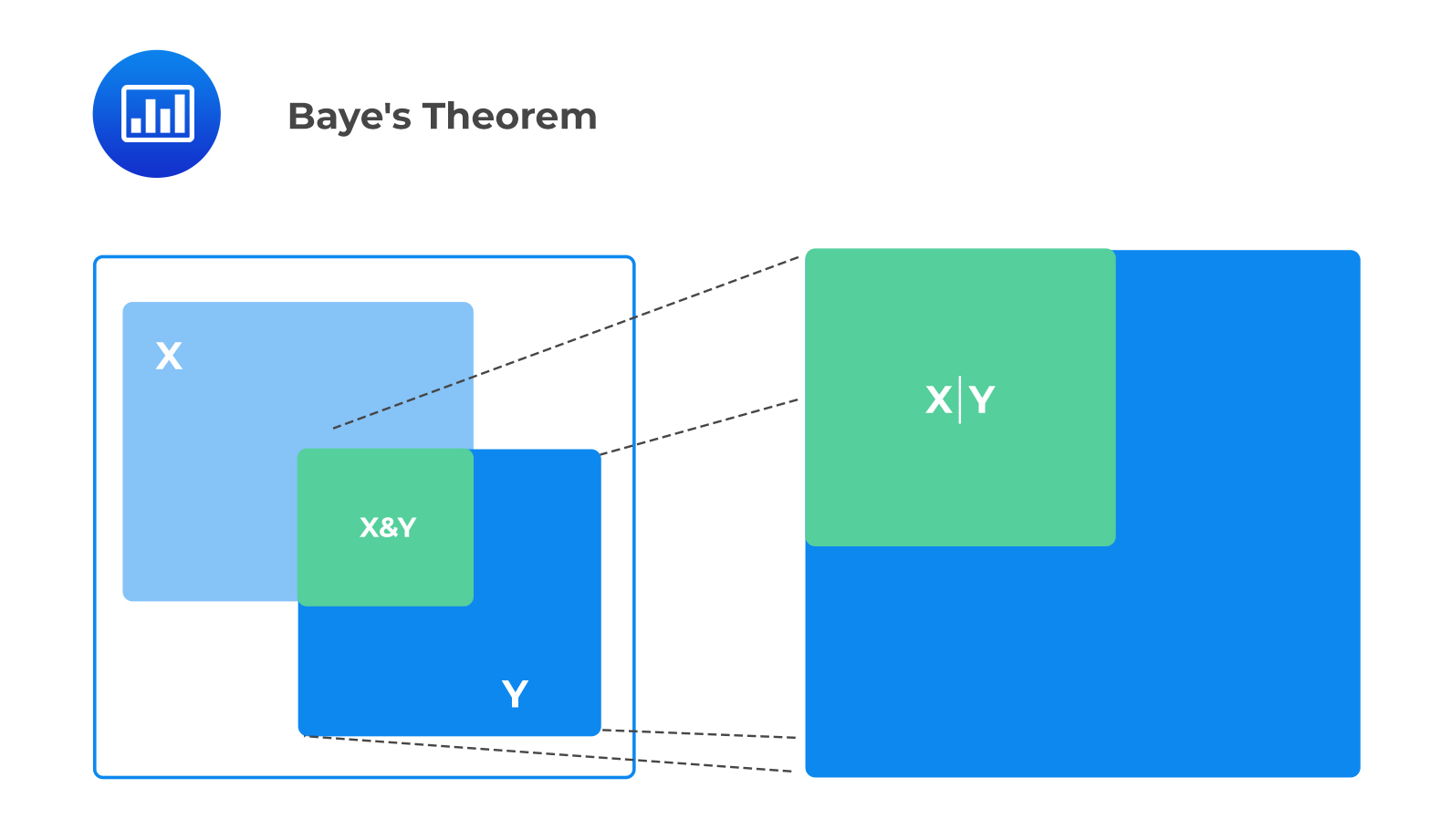

Conditional probability is a key part of Bayes’ theorem. In plain language, it is the probability of one thing being true, given that another thing is true. It differs from joint probability, which is the probability that both things are true without knowing that one of them must be true.

We can use an Euler diagram to illustrate the difference between conditional and joint probabilities. Note that, in the diagram, each large square has an area of 1.

Let \(\text{X}\) be the event that a patient’s left kidney is infected, and let \(\text{Y}\) be the event that the right kidney is infected. The green area on the left side of the diagram represents the probability that both of the patient’s kidneys are infected. This is the joint probability \(\text{P}(\text{X}, \text{Y})\). If \(\text{Y}\) is true (i.e., given that the right kidney is infected), then everything outside \(\text{Y}\) is dropped, and everything in \(\text{Y}\) is rescaled to the size of the original space. The rescaled green area on the right-hand side is now the conditional probability of \(\text{X}\) given \(\text{Y}\), expressed as \(\text{P}(\text{X} \mid \text{Y})\). Put differently, this is the probability that the left kidney is infected if we know that the right kidney is infected.

It is worth noting that the conditional probability of \(\text{X}\) given \(\text{Y}\) is not necessarily equal to the conditional probability of \(\text{Y}\) given \(\text{X}\).

Recall that for the univariate case, the conditional probability of an event \(\text{A}\) given an event \(\text{B}\) is defined by:

$$

P(A \mid B)=\frac{P(A \cap B)}{P(B)}, \quad \text { provided that } P(B)>0

$$

Here, \(B\) is the event that is known to have occurred, and \(P(A \mid B)\) measures how likely \(A\) is in light of that information. We can extend this idea to multivariate distributions.
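As a rough numerical illustration (the numbers are hypothetical and are not read off the diagram), suppose \(P(A \cap B)=0.1\), \(P(A)=0.2\), and \(P(B)=0.5\). Then:

$$
P(A \mid B)=\frac{P(A \cap B)}{P(B)}=\frac{0.1}{0.5}=0.2, \qquad P(B \mid A)=\frac{P(A \cap B)}{P(A)}=\frac{0.1}{0.2}=0.5
$$

The two conditional probabilities share the same joint probability in the numerator but are divided by different marginal probabilities, which is why they generally differ.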

Let \(\text{X}\) and \(\text{Y}\) be discrete random variables with joint probability mass function, \(\text{f}(\text{x}, \text{y})\) defined on the space \(\text{S}\).

Also, let \(\text{f}_{\text{X}}(\text{x})\) and \(\text{f}_{\text{Y}}(\text{y})\) be the marginal probability mass functions of \(\text{X}\) and \(\text{Y}\), respectively.

The conditional probability mass function of \(\text{X}\), given that \(\text{Y}=\text{y}\), is given by:

$$

\text{g}(\text{x} \mid \text{y})=\frac{\text{f}(\text{x}, \text{y})}{\text{f}_{\text{Y}}(\text{y})}, \quad \text { provided that } \text{f}_{\text{Y}}(\text{y})>0

$$

Similarly, the conditional probability mass function of \(\text{Y}\), given that \(\text{X}=\text{x}\), is given by:

$$
\text{h}(\text{y} \mid \text{x})=\frac{\text{f}(\text{x}, \text{y})}{\text{f}_{\text{X}}(\text{x})}, \quad \text { provided that } \text{f}_{\text{X}}(\text{x})>0
$$

Let \(\text{X}\) be the number of days of sickness over the last year, and let \(\text{Y}\) be the number of days of sickness this year. \(X\) and \(Y\) are jointly distributed as in the table below:

$$\begin{array}{|c|c|c|c|} X & {0} & {1} & {2} \\ {\Huge{\diagdown} } & & & \\ Y & & & \\ \hline1 & 0.1& 0.1& 0 \\

\hline 2 & 0.1& 0.1& 0.2\\

\hline 3 & 0.2& 0.1 & 0.1\\

\end{array}$$

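Determine the marginal probability mass functions of \(\text{X}\) and \(\text{Y}\), and the conditional distribution of \(\text{Y}\) given \(\text{X}=2\).

i) The marginal probability mass function of \(\text{X}\) is given by: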

$$

\text{P}(\text{X}=\text{x})=\sum_{\text{y}} \text{P}(\text{x}, \text{y}) \quad \text{x} \in \text{S}_{\text{x}}

$$

Therefore, we have:

$$\begin{aligned}

&\text{P}(\text{X}=0)=0.1+0.1+0.2=0.4 \\

&\text{P}(\text{X}=1)=0.1+0.1+0.1=0.3 \\

&\text{P}(\text{X}=2)=0+0.2+0.1=0.3

\end{aligned}

$$

When represented in a table, the marginal distribution of \(\text{X}\) is:

$$\begin{array}{c|c|c|c} \text{X} & 0 & 1 & 2 \\

\hline \text{P}(\text{X}=\text{x}) & 0.4 & 0.3& 0.3\\

\end{array}$$

ii) Similarly, the marginal probability mass function of \(\text{Y}\) is given by:

$$

\text{P}(\text{Y}=\text{y})=\sum_{\text{x}} \text{P}(\text{x}, \text{y}), \quad \text{y} \in \text{S}_{\text{y}}

$$

Therefore, we have:

$$

\begin{align}

&\text{P}(\text{Y}=1)=0.1+0.1+0=0.2 \\

&\text{P}(\text{Y}=2)=0.1+0.1+0.2=0.4 \\

&\text{P}(\text{Y}=3)=0.2+0.1+0.1=0.4

\end{align}$$

Therefore, the marginal distribution of \(\text{Y}\) is:

$$\begin{array}{c|c|c|c}

\text{Y}& 1 & 2 & 3 \\

\hline \text{P}(\text{Y}=\text{y}) & 0.2 & 0.4 & 0.4\\

\end{array}$$

iii) Using the definition of conditional probability:

$$

\begin{gathered}

\text{P}(\text{Y}=1 \mid \text{X}=2)=\frac{\text{P}(\text{Y}=1, \text{X}=2)}{\text{P}(\text{X}=2)}=\frac{0}{0.3}=0 \\

\text{P}(\text{Y}=2 \mid \text{X}=2)=\frac{\text{P}(\text{Y}=2, \text{X}=2)}{\text{P}(\text{X}=2)}=\frac{0.2}{0.3} \approx 0.67 \\

\text{P}(\text{Y}=3 \mid \text{X}=2)=\frac{\text{P}(\text{Y}=3, \text{X}=2)}{\text{P}(\text{X}=2)}=\frac{0.1}{0.3} \approx 0.33

\end{gathered}

$$

The conditional distribution is, therefore,

$$

\text{p}(\text{y} \mid \text{x}=2)= \begin{cases}0, & \text{y}=1 \\ 0.67, & \text{y}=2 \\ 0.33, & \text{y}=3\end{cases}

$$
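The conditioning step amounts to picking out the \(\text{X}=2\) column and rescaling it so that it sums to 1. The sketch below does this for the sickness-days table; it assumes the \((X=0, Y=2)\) entry is 0.1, the value used in the marginal sums above, and the function name `conditional_y_given_x` is illustrative.

```python
# Joint pmf of (X, Y) from the sickness-days table, keyed by (x, y).
# Assumption: the (x=0, y=2) entry is 0.1, as used in the marginal sums.
joint = {
    (0, 1): 0.1, (1, 1): 0.1, (2, 1): 0.0,
    (0, 2): 0.1, (1, 2): 0.1, (2, 2): 0.2,
    (0, 3): 0.2, (1, 3): 0.1, (2, 3): 0.1,
}


def conditional_y_given_x(joint_table, x):
    """Return {y: P(Y=y | X=x)} by dividing the x-column by P(X=x)."""
    p_x = sum(p for (xi, _), p in joint_table.items() if xi == x)
    if p_x <= 0:
        raise ValueError(f"P(X={x}) is zero, so the conditional pmf is undefined")
    return {y: p / p_x for (xi, y), p in joint_table.items() if xi == x}


cond = conditional_y_given_x(joint, 2)
print({y: round(p, 2) for y, p in cond.items()})
```

The guard on `p_x` mirrors the "provided that the marginal probability is positive" condition in the definition of a conditional pmf.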

An actuary determines that the numbers of monthly accidents in two towns, \(\text{M}\) and \(\text{N}\), are jointly distributed as

$$

f(x, y)=\frac{5 x+3 y}{81}, \quad x=1,2, \quad y=1,2,3

$$

Let \(\text{X}\) and \(\text{Y}\) be the number of monthly accidents in towns \(\text{M}\) and \(\text{N}\), respectively.

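Find the conditional probability mass function of \(\text{Y}\) given \(\text{X}=\text{x}\), \(\text{h}(\text{y} \mid \text{x})\), and the conditional probability mass function of \(\text{X}\) given \(\text{Y}=\text{y}\), \(\text{g}(\text{x} \mid \text{y})\).

a) We know that

$$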

h(y \mid x)=\frac{f(x, y)}{f_X(x)}

$$

The marginal pmf of \(\text{X}\) is

$$

\begin{aligned}

\text{f}_{\text{X}}(\text{x}) &=\sum_{\text{y}} \text{f}(\text{x}, \text{y})=\text{P}(\text{X}=\text{x}), \quad \text{x} \in \text{S}_{\text{x}} \\

&=\sum_{\text{y}=1}^3 \frac{(5 \text{x}+3 \text{y})}{81} \\

&=\frac{5 \text{x}+3(1)}{81}+\frac{5 \text{x}+3(2)}{81}+\frac{5 \text{x}+3(3)}{81}=\frac{15 \text{x}+18}{81} \\

& \therefore \text{f}_{\text{X}}(\text{x})=\frac{15 \text{x}+18}{81}, \quad \text{x}=1,2 \end{aligned}$$

Thus,

$$

h(y \mid x)=\frac{\frac{5 x+3 y}{81}}{\frac{15 x+18}{81}}=\frac{5 x+3 y}{15 x+18}, \quad y=1,2,3, \text { when } x=1 \text { or } 2

$$

b) Similarly, the marginal pmf of \(Y\) is given by:

$$

\begin{align}

\text{f}_{\text{Y}}(\text{y}) &=\sum_{\text{x}} \text{f}(\text{x}, \text{y})=\text{P}(\text{Y}=\text{y}), \quad \text{y} \in \text{S}_{\text{y}} \\

&=\sum_{\text{x}=1}^2 \frac{5 \text{x}+3 \text{y}}{81} \\

&=\frac{5(1)+3 \text{y}}{81}+\frac{5(2)+3 \text{y}}{81}

\end{align}

$$

$$

\therefore \text{f}_{\text{Y}}(\text{y})=\frac{15+6 \text{y}}{81}, \quad \text{y}=1,2,3

$$

Now,

$$

\begin{gathered}

g(x \mid y)=\frac{f(x, y)}{f_Y(y)} \\

=\frac{\frac{5 x+3 y}{81}}{\frac{15+6 y}{81}}=\frac{5 x+3 y}{15+6 y} \\

\therefore g(x \mid y)=\frac{5 x+3 y}{15+6 y} \quad x=1,2 \text { when } y=1 \text { or } 2 \text { or } 3

\end{gathered}

$$

We can use conditional distributions to find conditional probabilities.

For example,

$$

\text{P}(\text{Y}=1 \mid \text{X}=2)=\text{h}(1 \mid 2)=\frac{5 \cdot 2+3 \cdot 1}{15 \cdot 2+18}=\frac{13}{48}

$$

If we find all the probabilities for this conditional probability function, we would see that they behave similarly to the joint probability mass functions seen in the previous reading.

Now, let’s keep \(\text{X}=\text{2}\) fixed and check this:

$$

\text{P}(\text{Y}=2 \mid \text{X}=2)=\text{h}(2 \mid 2)=\frac{5 \cdot 2+3 \cdot 2}{15 \cdot 2+18}=\frac{16}{48}

$$

and,

$$

\text{P}(\text{Y}=3 \mid \text{X}=2)=\text{h}(3 \mid 2)=\frac{5 \cdot 2+3 \cdot 3}{15 \cdot 2+18}=\frac{19}{48}

$$

Summing up the above probabilities, we get

$$

\sum_{\text{y}=1}^{3} \text{P}(\text{Y}=\text{y} \mid \text{X}=2)=\text{h}(1 \mid 2)+\text{h}(2 \mid 2)+\text{h}(3 \mid 2)=\frac{13}{48}+\frac{16}{48}+\frac{19}{48}=1

$$

Note that the same holds for the conditional distribution of \(\text{X}\) given \(\text{Y}\), \(\text{g}(\text{x} \mid \text{y})\): for each fixed \(\text{y}\), the conditional probabilities sum to 1 over \(\text{x}\).

Thus, \(\text{h}(\text{y} \mid \text{x})\) and \(\text{g}(\text{x} \mid \text{y})\) both satisfy the conditions of a probability mass function, and we can do the same operations we did on joint discrete probability functions.
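The claim that both conditional functions are valid probability mass functions can be checked for every conditioning value, not just \(\text{X}=2\). The following sketch does so with exact fractions; the Python names `f`, `h`, and `g` simply mirror the symbols used above.

```python
from fractions import Fraction

# Support of the joint pmf f(x, y) = (5x + 3y) / 81.
X_VALUES = (1, 2)
Y_VALUES = (1, 2, 3)


def f(x, y):
    """Joint pmf of the monthly accident counts in towns M and N."""
    return Fraction(5 * x + 3 * y, 81)


def h(y, x):
    """Conditional pmf of Y given X = x: f(x, y) / f_X(x)."""
    f_x = sum(f(x, yi) for yi in Y_VALUES)
    return f(x, y) / f_x


def g(x, y):
    """Conditional pmf of X given Y = y: f(x, y) / f_Y(y)."""
    f_y = sum(f(xi, y) for xi in X_VALUES)
    return f(x, y) / f_y


# The brute-force values agree with the closed forms derived above.
for x in X_VALUES:
    for y in Y_VALUES:
        assert h(y, x) == Fraction(5 * x + 3 * y, 15 * x + 18)
        assert g(x, y) == Fraction(5 * x + 3 * y, 15 + 6 * y)

# Each conditional pmf is non-negative and sums to 1 over its own variable.
for x in X_VALUES:
    assert sum(h(y, x) for y in Y_VALUES) == 1
for y in Y_VALUES:
    assert sum(g(x, y) for x in X_VALUES) == 1

print(h(1, 2), h(2, 2), h(3, 2))
```

Note the argument order: `h(y, x)` and `g(x, y)` follow the mathematical notation \(\text{h}(\text{y} \mid \text{x})\) and \(\text{g}(\text{x} \mid \text{y})\), with the conditioning value second.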

Learning Outcome:

Topic 3. b: Multivariate Random Variables-Determine conditional and marginal probability functions for discrete random variables only.
